Projects

The current number of active grants supporting these projects is 27. Total value of awarded active grants: $11,205,024 (direct + indirect costs).

Agencies: ONR, NSF, Google, NIH, NASA, and IES, among others

All projects are highly collaborative; names are listed in alphabetical order.

Babies and Machines and Visual Object Learning

Team: Sven Bambach, Jeremy Borjon, Elizabeth Clerkin, David Crandall, Hadar Karmazyn, Braden King, Lauren Slone, Linda B Smith, Umay Suanda, Chen Yu

Project 1: An Egocentric Perspective on Visual Object Learning in Toddlers and Exploring Inter-Observer Differences in First-Person Object Views using Deep Learning Models

Led by: Sven Bambach and David Crandall

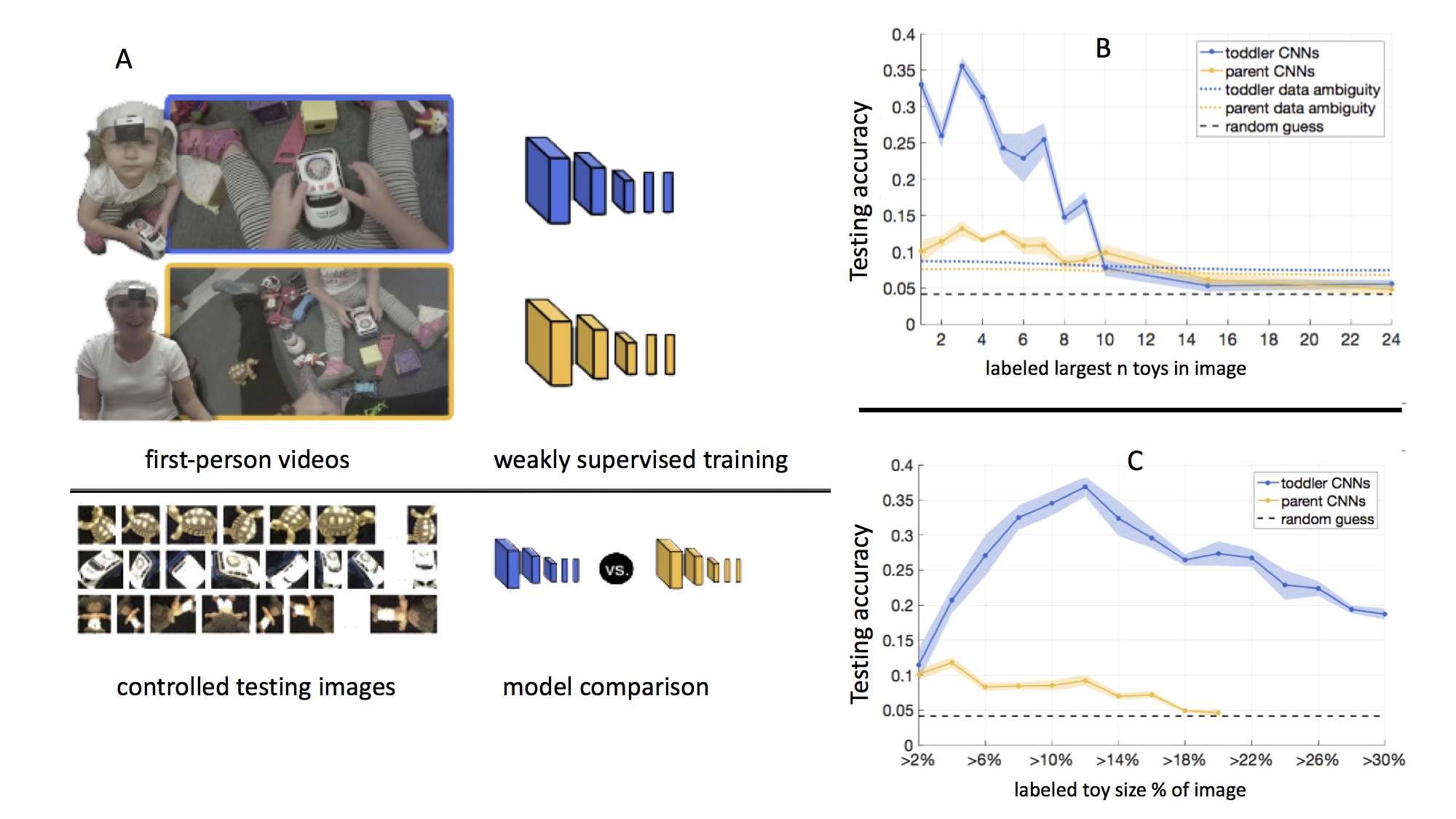

In this project we evaluate how the visual statistics of a toddler's first-person view can facilitate visual object learning. We use raw frames from head cameras worn by toddlers and parents to train machine learning algorithms to recognize toy objects, and show that toddler views lead to better object learning under various training paradigms. This project led to a conference paper and talk at the IEEE International Conference on Development and Learning and Epigenetic Robotics (ICDL-EPIROB) 2017.

In this project we explore the potential of using deep learning models as tools to study human vision with head-mounted cameras. We train artificial neural network models on data from individual subjects who all explored the same set of objects, and visualize the neural activations of the trained models. The results demonstrate that data from subjects who created more diverse object views produced models that learn more robust object representations. Our paper on this project has been accepted to the Workshop on Mutual Benefits of Cognitive and Computer Vision, part of the IEEE International Conference on Computer Vision (ICCV) 2017.

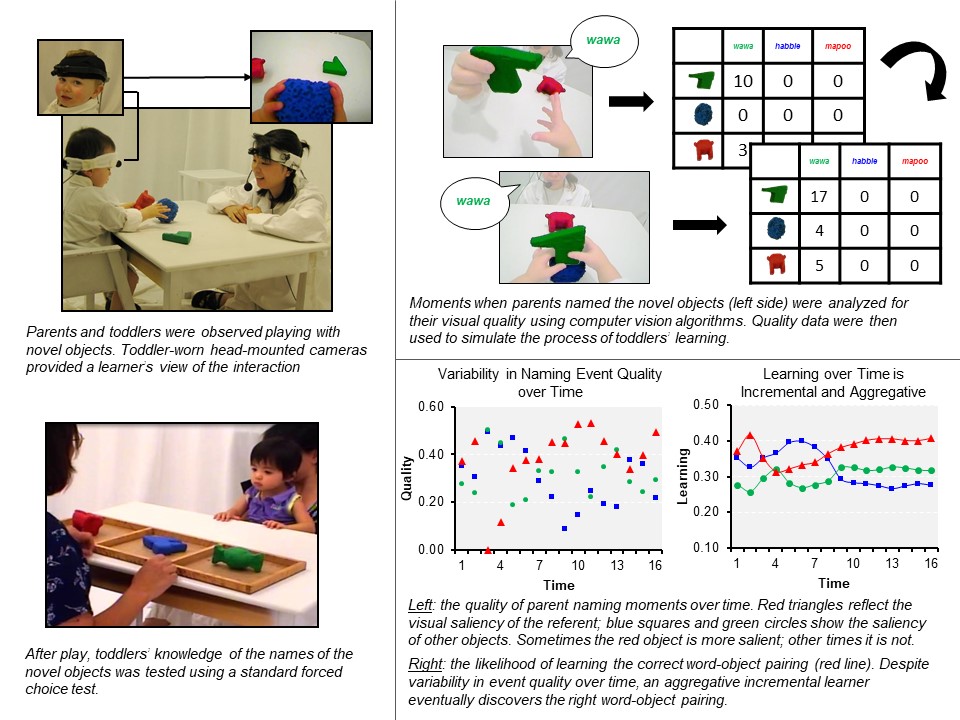

Project 2: What's the relevant data for toddlers' word learning?

Led by: Linda B Smith, Umay Suanda, Chen Yu

This project addresses a heavily debated issue in early language learning: do toddlers learn new words by exploiting a few highly informative moments of hearing an object's name (i.e., moments when the referent is transparent), or by aggregating information across many moments, including less informative ones? We examined the auditory signal (moments when parents named an object) and the visual signal (the visual properties of the referent and other objects, captured by head-mounted cameras) from observations of parent-toddler play with novel objects. We then analyzed these signals in relation to how well toddlers remembered which names went with which objects. Fine-grained, event-level analyses and simulation studies revealed that toddlers' learning is best characterized by a process that aggregates information across many moments. Although this process may be slow and error-prone in the short run, it builds a more robust lexicon in the long run.
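To make the contrast concrete, here is a minimal Python sketch of the aggregation idea. It assumes each naming moment can be summarized as a word plus the objects in the child's view, each with a salience weight (for example, its share of the head-camera frame). The functions `aggregate_word_object_counts` and `best_referent`, the weighting scheme, and the novel words "dax", "blicket", and "wug" are hypothetical illustrations of generic cross-situational accumulation, not the project's actual model or simulations.

```python
from collections import defaultdict

def aggregate_word_object_counts(naming_events):
    """Accumulate word-object evidence across naming moments.

    Each event is (word, visible_objects), where visible_objects maps an
    object name to a hypothetical salience weight in the child's view.
    """
    counts = defaultdict(lambda: defaultdict(float))
    for word, visible_objects in naming_events:
        total = sum(visible_objects.values())
        if total == 0:
            continue  # nothing in view: this moment carries no evidence
        for obj, weight in visible_objects.items():
            # Normalize so every naming moment contributes one unit of evidence.
            counts[word][obj] += weight / total
    return counts

def best_referent(counts, word):
    """Return the object with the most accumulated evidence for `word`."""
    candidates = counts.get(word)
    if not candidates:
        return None
    return max(candidates, key=candidates.get)

# Hypothetical naming moments; the first one alone is misleading.
events = [
    ("dax", {"dax_toy": 0.4, "blicket_toy": 0.6}),
    ("dax", {"dax_toy": 0.5, "wug_toy": 0.5}),
    ("dax", {"dax_toy": 0.7, "blicket_toy": 0.3}),
]
print(best_referent(aggregate_word_object_counts(events[:1]), "dax"))  # blicket_toy
print(best_referent(aggregate_word_object_counts(events), "dax"))      # dax_toy
```

A single ambiguous moment can favor the wrong object, while summing evidence over several moments lets the consistently present referent win, which is the intuition behind the aggregation account.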

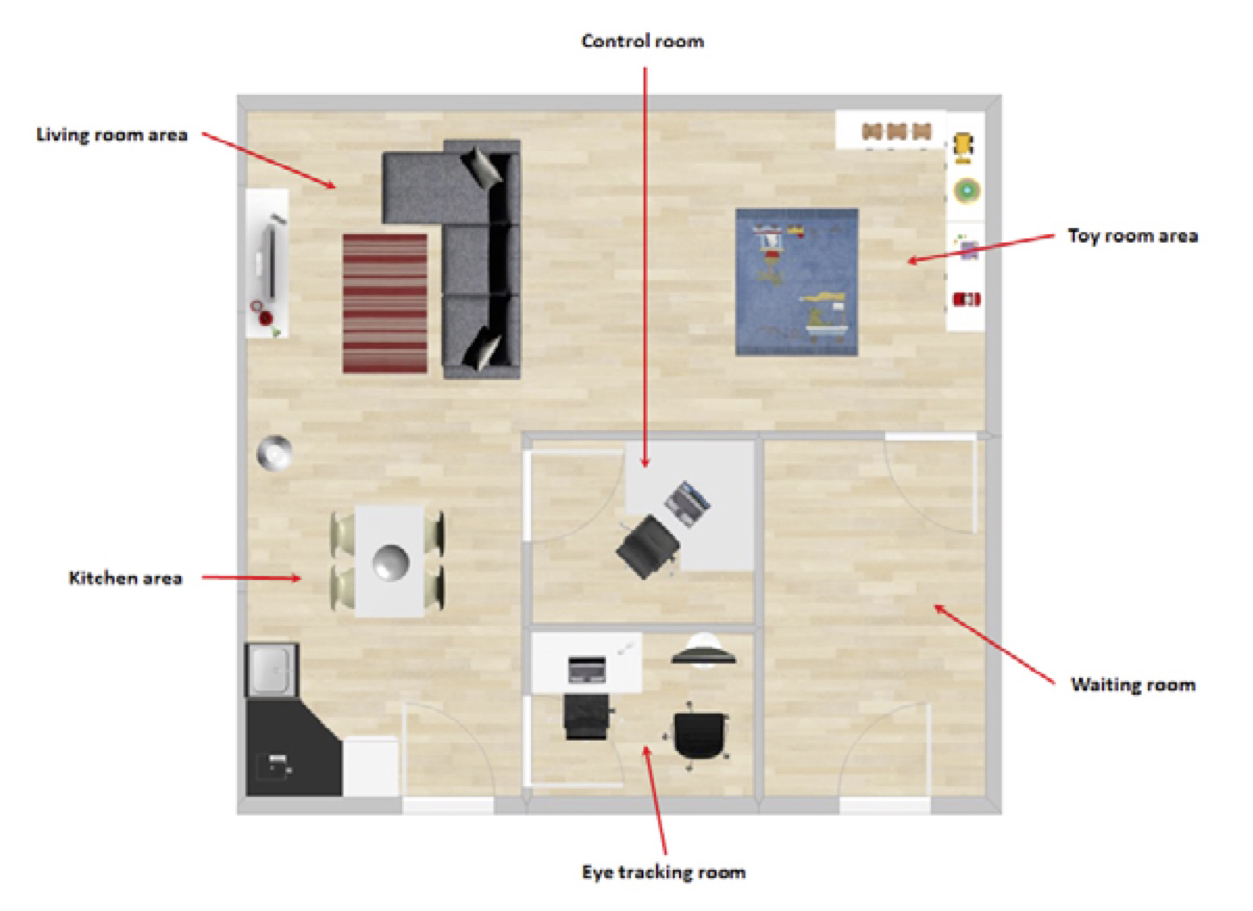

Project 3: HOME (Home-like Observational Multisensory Environment)

Led by: Jeremy Borjon, Linda B Smith, Chen Yu

HOME is a room with a state-of-the-art, home-like environment equipped with cutting-edge sensing and computing technology. It allows us to measure and quantify parent-infant interactions in real time across multiple modalities, and it is specifically designed to encourage spontaneous, natural behavior in a context consistent with the clutter and noise of a typical home. The room is equipped to wirelessly capture head-mounted eye tracking and autonomic physiology, and to track participants' motion as they interact and behave in the room. This environment is the first of its kind to be used primarily for basic research on the fundamental principles of social interaction. Because HOME is designed to resemble an ordinary home, its findings should apply more directly to real-world settings.

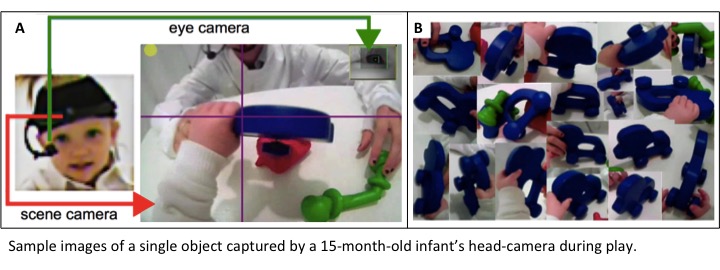

Project 4: Toddlers' Manual and Visual Exploration of Objects during Play

Led by: Lauren Slone, Linda B Smith, Chen Yu

This project aims to characterize the nature of toddlers' early visual experiences of objects and their relation to object manipulation and language abilities. Using head-mounted eye tracking, this study objectively measures individual differences in the moment-to-moment variability of visual instances of the same object, as seen from infants' first-person views. One finding from this research is that infants who generated more variable visual object images through manual object manipulation at 15 months of age had larger productive vocabularies six months later. This is the first evidence that image-level object variability matters, and it may be the link that connects infant object manipulation to language development.
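One simple way to operationalize image-level variability is the mean pairwise distance between feature vectors, assuming each frame in which a child views an object has already been reduced to such a vector (for example, the object's size and orientation in the head-camera image). The `view_variability` function and the toy feature values below are a hypothetical proxy for illustration; the project's own measure is not specified here. `math.dist` requires Python 3.8 or later.

```python
import math
from itertools import combinations

def view_variability(views):
    """Mean pairwise Euclidean distance between feature vectors describing
    one child's views of a single object; higher means more varied views."""
    if len(views) < 2:
        return 0.0  # a single view has no variability to measure
    distances = [math.dist(a, b) for a, b in combinations(views, 2)]
    return sum(distances) / len(distances)

# Hypothetical 2-D features (e.g., normalized size and rotation) per frame.
steady_views = [(0.50, 0.10), (0.52, 0.12), (0.51, 0.11)]
varied_views = [(0.20, 0.90), (0.70, 0.10), (0.45, 0.50)]
print(view_variability(steady_views) < view_variability(varied_views))  # True
```

Averaging over all pairs, rather than measuring distance from a single canonical view, keeps the score independent of which frame happens to come first.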

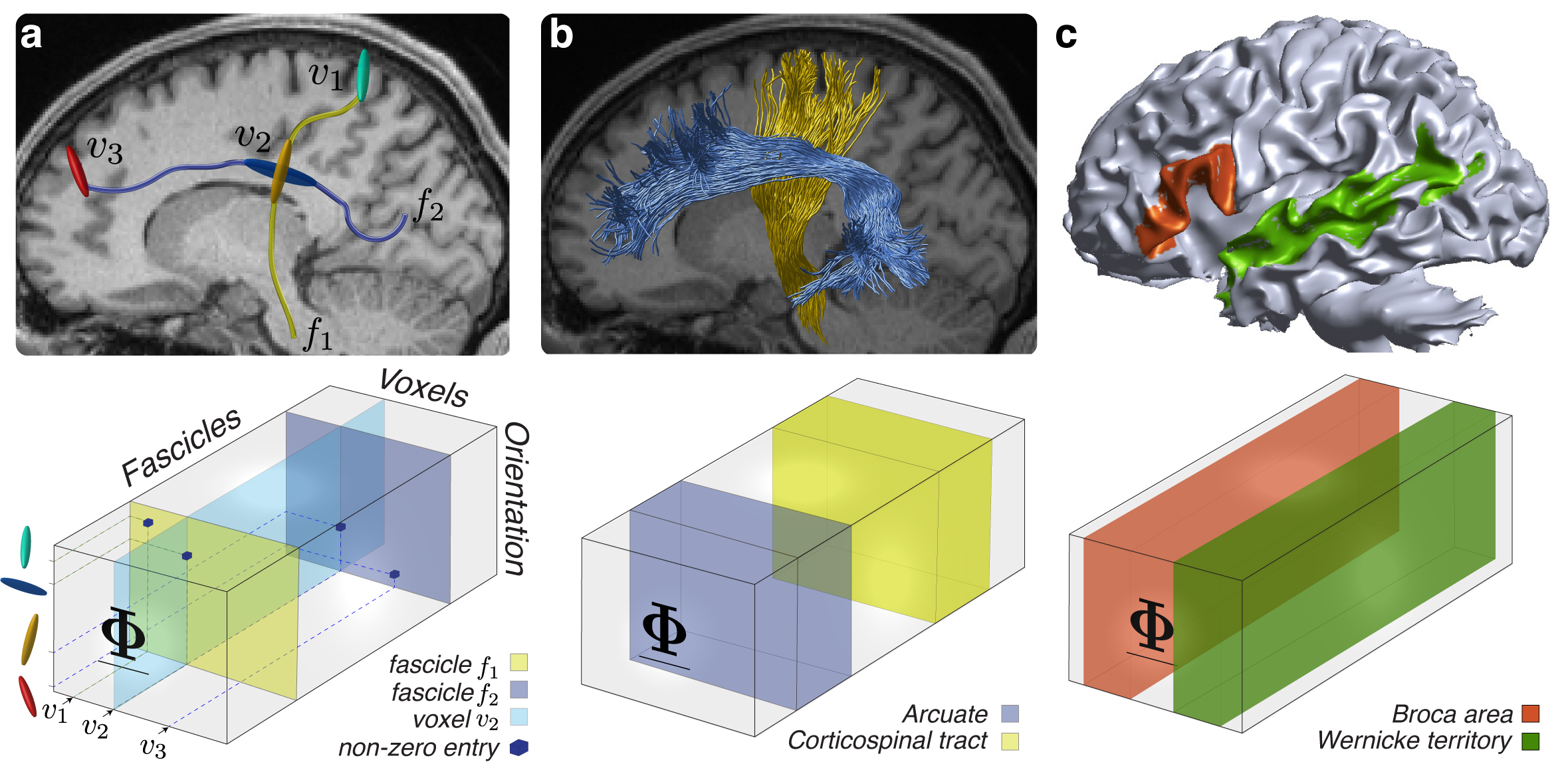

Multidimensional encoding of brain connectomes

Team: Cesar Caiafa, Franco Pestilli, Andrew Saykin, Olaf Sporns

In this project we developed new computational methods for encoding brain data to support learning of brain network structure from neuroimaging data. We use multidimensional arrays to compress both brain data and computational models into compact representations that preserve the anatomical relationships between the data and the model. These multidimensional models are lightweight and allow efficient anatomical operations for studying the large-scale networks of the human brain. To date, this project has led to a conference paper and spotlight talk at NIPS (Neural Information Processing Systems; Caiafa, Saykin, Sporns and Pestilli, NIPS 2017) and a Nature Scientific Reports article (Caiafa and Pestilli, NSR 2017).
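As a rough illustration of why a multidimensional sparse encoding is convenient, here is a Python/NumPy sketch that stores a connectome model as a sparse three-way array Φ indexed by (dictionary atom, voxel, fascicle) in coordinate form. Predicting a signal then amounts to contracting the fascicle mode with fascicle weights w and the atom mode with a dictionary D (in mode-n product notation, Y = Φ ×₁ D ×₃ w), and anatomical queries become index lookups. The class name, index layout, and toy numbers are assumptions for illustration, not the published implementation.

```python
import numpy as np

class SparseConnectomeTensor:
    """Hypothetical coordinate-format (COO) 3-way array Phi[atom, voxel, fascicle]."""

    def __init__(self, atoms, voxels, fascicles, values, shape):
        self.atoms = np.asarray(atoms, dtype=int)
        self.voxels = np.asarray(voxels, dtype=int)
        self.fascicles = np.asarray(fascicles, dtype=int)
        self.values = np.asarray(values, dtype=float)
        self.shape = shape  # (n_atoms, n_voxels, n_fascicles)

    def predict(self, dictionary, weights):
        """Contract Phi with weights and dictionary: shape (n_directions, n_voxels)."""
        dictionary = np.asarray(dictionary, dtype=float)  # (n_directions, n_atoms)
        weights = np.asarray(weights, dtype=float)        # (n_fascicles,)
        n_atoms, n_voxels, _ = self.shape
        # Contract the fascicle mode: scale each nonzero by its fascicle's weight.
        contrib = self.values * weights[self.fascicles]
        # Sum contributions that land on the same (atom, voxel) pair.
        reduced = np.zeros((n_atoms, n_voxels))
        np.add.at(reduced, (self.atoms, self.voxels), contrib)
        # Contract the atom mode with the dictionary.
        return dictionary @ reduced

    def voxels_of(self, fascicle):
        """Anatomical query: sorted voxel indices that a fascicle passes through."""
        return sorted(set(self.voxels[self.fascicles == fascicle].tolist()))

# Toy model: 2 atoms, 3 voxels, 2 fascicles, 3 nonzero entries.
phi = SparseConnectomeTensor(
    atoms=[0, 1, 0], voxels=[0, 1, 2], fascicles=[0, 0, 1],
    values=[1.0, 2.0, 3.0], shape=(2, 3, 2),
)
print(phi.predict(dictionary=[[1.0, 1.0]], weights=[0.5, 2.0]).tolist())  # [[0.5, 1.0, 6.0]]
print(phi.voxels_of(0))  # [0, 1]
```

Only the nonzero entries are stored, so memory grows with the number of (atom, voxel, fascicle) incidences rather than with the full product of the three dimensions.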

Visual learning and symbol systems: Math and Letter Development

Team: Rob Goldstone, Karin James, Tyler Marghetis, Sophia Vinci-Booher

Project 1: Visual experience during reading and the acquisition of number concepts

Led by: Rob Goldstone, Tyler Marghetis

A fundamental component of numerical understanding is the 'mental number line,' in which numbers are conceived as locations on a spatial path. Around the world, the mental number line takes on different forms (for instance, sometimes running left-to-right, sometimes right-to-left), but the origins of this variability are not yet completely understood. Here, combining big data (a corpus of four million books) and a targeted dataset (a small corpus of children's literature), we are modeling early and lifelong visual experience with written numbers to see whether low-level visual exposure to written numbers can account for the high-level structure and form of the mental number line.

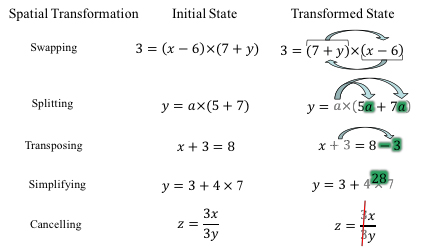

Led by: Rob Goldstone, Tyler Marghetis

In a series of lab experiments, we are exposing adults to a new computer-based algebra system. As they explore this new system, they self-generate a stream of sensorimotor information about algebraic notations. Results indicate that the best learners are those who generate highly variable streams of perceptual information - suggesting that self-generated perceptual variability might be a critical component in learning higher-level mathematical skills.

Project 3: Brain systems supporting letter writing and letter perception

Led by: Karin James, Sophia Vinci-Booher

Handwriting is a complex visual-motor behavior that leads to changes in visual perception and in the brain systems that support it. This project focuses on the mechanisms through which handwriting contributes to these developmental changes. It integrates functional and diffusion MRI to understand the relationship between visual-motor behaviors, such as handwriting, and developmental changes in brain function, brain structure, and perception.


Variability and Visual Learning

Team: Karin James, Daniel Plebanek, Sophia Vinci-Booher, Rob Goldstone, Thomas Gorman

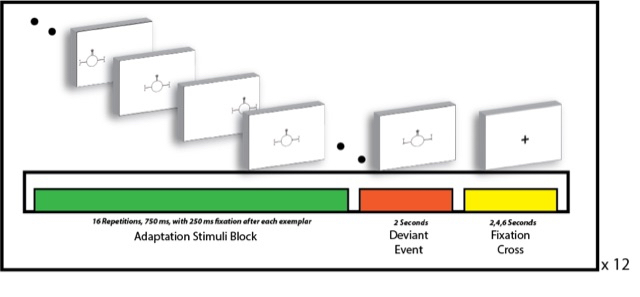

Project 1: The neural underpinnings of variability in category learning

Led by: Karin James and Dan Plebanek

Perceptual variability is often viewed as having multiple benefits in object learning and categorization. Despite abundant results demonstrating these benefits, such as increased transfer of knowledge, the neural mechanisms underlying variability, as well as the developmental trajectories by which variability precipitates representational change, are unknown. By manipulating individuals' exposure to variability of novel, metrically organized categories during an fMRI-adaptation paradigm, we were able to quantify and manipulate variability to assess the functional differences between similarity and variability in category learning and generalization in adulthood and late childhood. During this study, participants were repeatedly exposed to category members from different distributions. After a period of adaptation, a deviant stimulus that differed from the expected distribution was presented. Our results suggest developmental differences in the recruitment of the ventral temporal cortex during variable category learning. Furthermore, adults demonstrated input-specific patterns of generalization, with broader categories formed as a result of highly similar exposure and rule-specific categories formed as a result of more variable exposure. Children's neural activity, in contrast, suggested generalization only as a result of variable exposure. These results have important implications for how information about the world's structure can shape neural representations.

Project 2: Category structure and distributed representations

Led by: Karin James and Dan Plebanek

The world is full of structure, such as the way that some category members or category features are more representative of the category as a whole. Past research has demonstrated that individuals are quite adept at extracting this structure throughout development. However, traditional analyses of neural representations fail to capture the rich underlying structure of the input. Current projects in the lab aim to capture the effects of this structure by combining category scaling tasks with multivariate analyses of neuroimaging data. We expect the results to reveal how the underlying data inform neural representations.

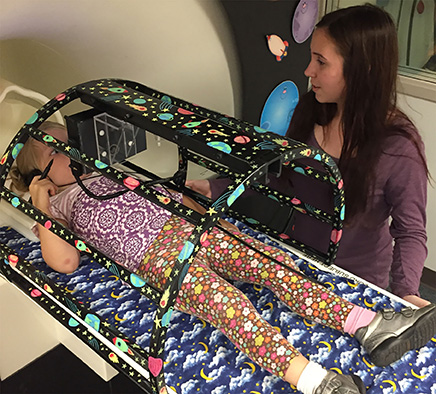

Project 3: The MRItab: An MR-compatible touchscreen with video display

Led by: Karin James and Sophia Vinci-Booher

Interactive devices with touchscreen interfaces are ubiquitous in our daily lives (digital tablets are commonly used in schools to help young children learn, and phones are the medium for a large share of social communication), yet very little is known about how these interactions affect the brain in real time. The neural effects of interacting with these devices have been understudied because no such device could function during brain imaging in the Magnetic Resonance Imaging (MRI) environment. We have developed the MRItab, the first interactive digital tablet designed for use in high electromagnetic field environments. The MRItab mimics digital tablets and phones, making it possible to measure changes in brain activation during interaction with touchscreen devices in the MRI scanner. With the help of the Johnson Center, we have obtained a provisional patent, and the device has met several safety requirements.

Electronic tablet for use in functional MRI, US Patent Application No. 62/370,372, filed August 3, 2016 (Sturgeon, J., Shroyer, A., Vinci-Booher, S., & James, K.H., applicants). Amended February 4, 2019.

Project 4: The Benefits of Variability During Skill Training for Transfer

Led by: Thomas Gorman and Rob Goldstone

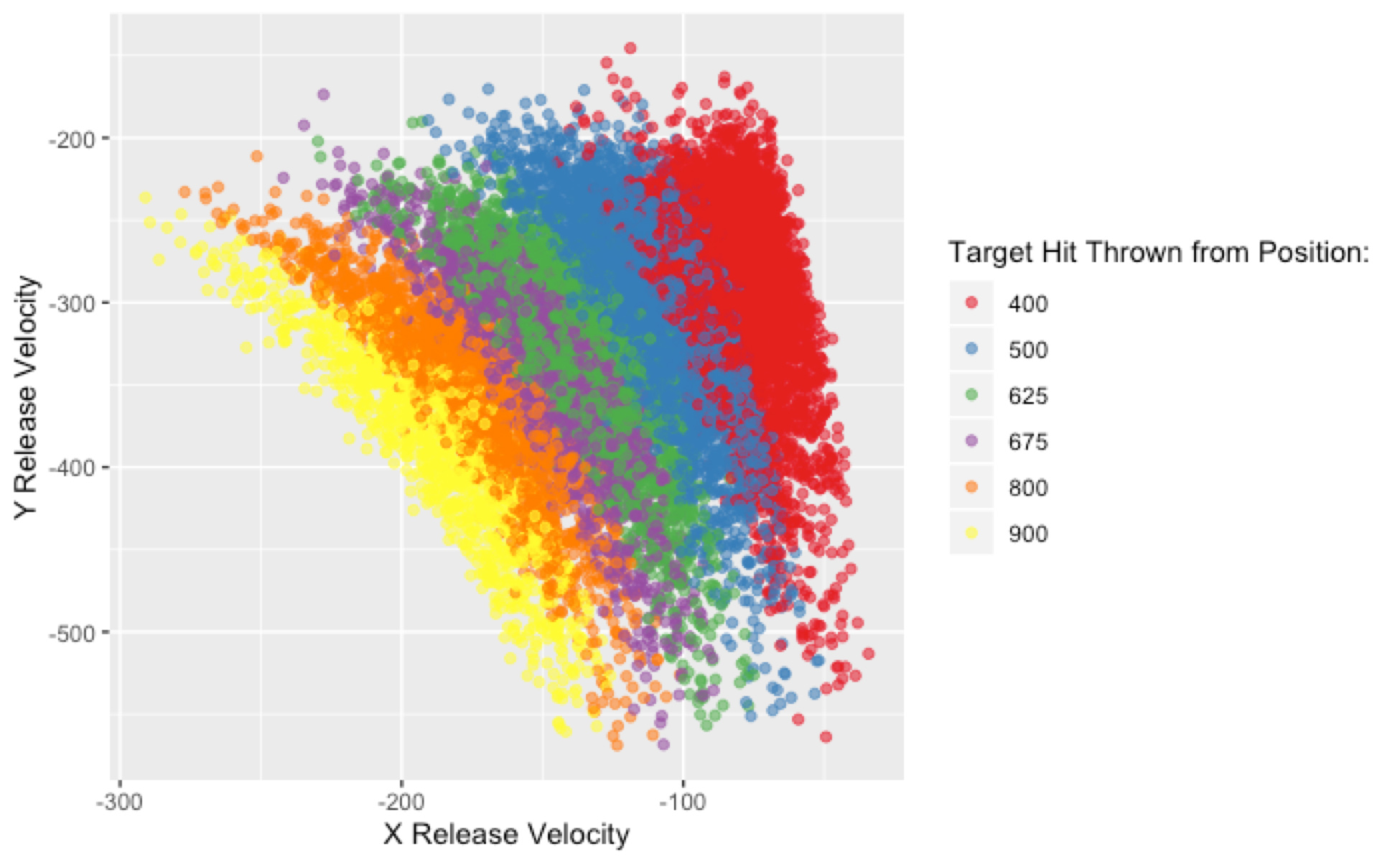

Exposing learners to variability during training has been shown to improve performance in subsequent transfer testing. Such variability benefits are often explained by assuming that learners develop some general task schema or structure. However, much of this research has neglected to account for differences in similarity between varied and constant training conditions. In a between-groups manipulation, we trained participants on a simple projectile-launching task under either varied or constant conditions. We replicated previous findings showing a transfer advantage of varied over constant training. Furthermore, we show that a standard similarity-based model is insufficient to account for the benefits of variation; however, if the model is adjusted to assume that varied learners are tuned to a broader generalization gradient, then a similarity-based model is sufficient to explain these benefits. Our results therefore indicate that some variability benefits can be accounted for without positing the learning of abstract schemata or rules.
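The role of the generalization gradient can be illustrated with a minimal Python sketch. It assumes an exponential gradient over a single task dimension (for example, launch distance): each training position contributes similarity exp(-c · |test - train|) to a test position, and the specificity parameter c sets the gradient's width. The function name, the averaging rule, and the toy positions are hypothetical; this is a generic instance-based similarity computation, not the fitted model from the study, and the numbers only show how c reshapes generalization rather than reproducing any data. Assigning varied learners a smaller c is one way to express a "broader generalization gradient."

```python
import math

def transfer_similarity(test_x, training_xs, c):
    """Mean exponential similarity of a test position to the training positions.

    c is the specificity: larger c yields a steeper, narrower generalization
    gradient; smaller c yields a broader one.
    """
    if not training_xs:
        raise ValueError("need at least one training position")
    return sum(math.exp(-c * abs(test_x - x)) for x in training_xs) / len(training_xs)

# Hypothetical training positions on one task dimension.
varied_training = [1.0, 2.0, 3.0]
for c in (1.0, 0.3):
    # A smaller c spreads generalization further from the trained positions.
    print(c, transfer_similarity(5.0, varied_training, c))
```

Keeping c as a free parameter per group is what allows the same similarity computation to produce either a narrow or a broad transfer profile, so no abstract rule or schema needs to be represented.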
